AI-Generated Circuit Boards

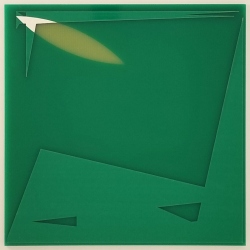

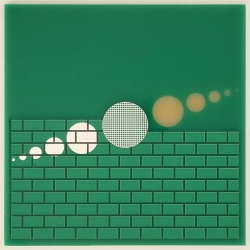

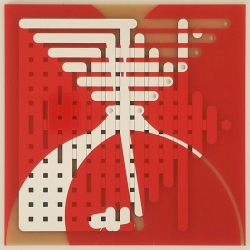

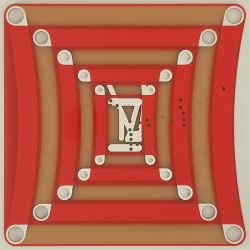

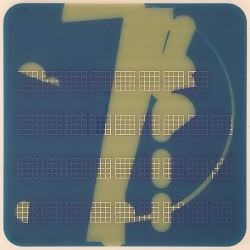

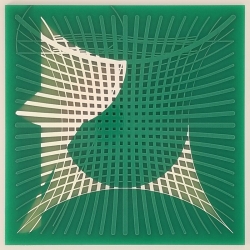

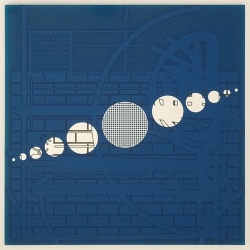

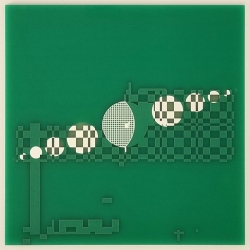

As an extension of my “PCB Drawings” series (circuit boards designed as art objects), I’ve trained an AI network on my circuit board models to generate its own circuit boards. In more detail, I’ve designed a simple 5-layer NLP network to learn from the Eagle circuit board models. The network consists of (in order) an embedding layer, two bidirectional LSTM layers, one attention layer, and a dense, softmax-activated layer. The dataset was a corpus of 121 circuit board models (see below for details). Training took about 11 hours on an Nvidia RTX 2080 GPU. Below, you can see a gallery of circuit boards the network generated after training. In the next iteration of the project, I would like to try more advanced NLP architectures, such as BERT or GPT-3.
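For readers who want to picture the layer stack described above, here is a minimal Keras sketch. It is not the code used for the project: every hyperparameter (`VOCAB_SIZE`, `SEQ_LEN`, `EMBED_DIM`, `LSTM_UNITS`), the choice of dot-product self-attention, the pooling step before the output layer, and the optimizer and loss are assumptions made only for illustration.

```python
# Hypothetical hyperparameters; none of these values come from the project.
VOCAB_SIZE = 64    # distinct characters in the encoded corpus
SEQ_LEN = 128      # characters of context fed to the network
EMBED_DIM = 32
LSTM_UNITS = 128


def build_model(vocab_size=VOCAB_SIZE, seq_len=SEQ_LEN,
                embed_dim=EMBED_DIM, lstm_units=LSTM_UNITS):
    """Embedding -> 2 x BiLSTM -> attention -> dense softmax (next-character model)."""
    # Imported here so this file can be loaded without TensorFlow installed.
    from tensorflow import keras
    from tensorflow.keras import layers

    inputs = keras.Input(shape=(seq_len,), dtype="int32")
    x = layers.Embedding(vocab_size, embed_dim)(inputs)
    x = layers.Bidirectional(layers.LSTM(lstm_units, return_sequences=True))(x)
    x = layers.Bidirectional(layers.LSTM(lstm_units, return_sequences=True))(x)
    # Assumed attention variant: dot-product self-attention (query == value).
    x = layers.Attention()([x, x])
    # Weight-free pooling (an assumption) to collapse the sequence into one vector.
    x = layers.GlobalAveragePooling1D()(x)
    # One probability per character in the vocabulary.
    outputs = layers.Dense(vocab_size, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
    return model
```

Calling `build_model().summary()` prints the layer stack. Trained on sliding windows of the character sequence, a model like this predicts the next character, and new boards are generated by sampling from it one character at a time.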

Technical Notes

Eagle PCB boards are XML-based, looking something like this:

<board>

<plain>

<wire x1="0" y1="0" x2="100" y2="0" width="0" layer="20" curve="-10"/>

<wire x1="100" y1="0" x2="100" y2="100" width="0" layer="20"/>

<wire x1="100" y1="100" x2="0" y2="100" width="0" layer="20" curve="-10"/>

<wire x1="0" y1="100" x2="0" y2="0" width="0" layer="20"/>

<circle x="11.5" y="88.5" radius="1.5" width="3" layer="1"/>

<circle x="22.5" y="77.5" radius="1.5" width="3" layer="1"/>

<circle x="33.5" y="66.5" radius="1.5" width="3" layer="1"/>

<circle x="44.5" y="55.5" radius="1.5" width="3" layer="1"/>

<circle x="44.5" y="44.5" radius="1.5" width="3" layer="1"/>

NLP models train on language tokens, which can be either entire words or characters. For my experiments, I’ve used character-based tokens, since the floating-point numbers cannot be expressed as word-level tokens (there is an infinite number of them). Also, to speed up training and avoid XML syntax errors (e.g. an opening ‘<’ that is never closed), I’ve encoded the Eagle models into sequences of characters like this one (the encoding of the XML fragment above):

B N W Ẋ0.0 Ẏ0.0 Ẍ100.0 Ῡ0.0 Ẁ0 Ḽ20 Ϫↄ Ḉ-10 Ո○ w W Ẋ100.0 Ẏ0.0 Ẍ100.0 Ῡ100.0 Ẁ0 Ḽ20 Ϫↄ Ḉ0 Ո○ w W Ẋ100.0 Ẏ100.0 Ẍ0.0 Ῡ100.0 Ẁ0 Ḽ20 Ϫↄ Ḉ-10 Ո○ w W Ẋ0.0 Ẏ100.0 Ẍ0.0 Ῡ0.0 Ẁ0 Ḽ20 Ϫↄ Ḉ0 Ո○ w C Ӿ11.5 Ұ88.5 ₨1.5 Ẁ3.0 Ḽ1 c C Ӿ22.5 Ұ77.5 ₨1.5 Ẁ3.0 Ḽ1 c C Ӿ33.5 Ұ66.5 ₨1.5 Ẁ3.0 Ḽ1 c C Ӿ44.5 Ұ55.5 ₨1.5 Ẁ3.0 Ḽ1 c C Ӿ44.5 Ұ44.5 ₨1.5 Ẁ3.0 Ḽ1 c

With this extra encoding step, I’ve managed to avoid many correctness problems and to cut training time enough to experiment with multiple network configurations.
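The encoding step can be sketched roughly as follows. This is an illustration, not the project's encoder: the symbol tables are inferred from the example above, the names `TAG_OPEN`, `ATTR_SYMBOL`, `encode` and `char_ids` are hypothetical, and the output differs from the real encoding in small ways. The `Ϫↄ` and `Ո○` tokens are left out because the post does not say what they stand for, missing attributes such as `curve` are skipped rather than filled with a default, and only coordinates and radii are normalized to floats.

```python
import xml.etree.ElementTree as ET

# Symbols copied from the encoded example; the mapping itself is an inference.
TAG_OPEN = {"board": "B", "plain": "N", "wire": "W", "circle": "C"}
ATTR_SYMBOL = {
    "wire": {"x1": "Ẋ", "y1": "Ẏ", "x2": "Ẍ", "y2": "Ῡ",
             "width": "Ẁ", "layer": "Ḽ", "curve": "Ḉ"},
    "circle": {"x": "Ӿ", "y": "Ұ", "radius": "₨", "width": "Ẁ", "layer": "Ḽ"},
}
# Attributes written as floats in the example ("0" becomes "0.0").
FLOAT_ATTRS = {"x1", "y1", "x2", "y2", "x", "y", "radius"}


def encode(element):
    """Return the list of tokens for an element and all of its children."""
    tag = element.tag
    tokens = [TAG_OPEN[tag]]
    for name, symbol in ATTR_SYMBOL.get(tag, {}).items():
        value = element.get(name)
        if value is None:
            continue  # assumption: skip absent attributes instead of using defaults
        if name in FLOAT_ATTRS:
            value = str(float(value))
        tokens.append(symbol + value)
    for child in element:
        tokens.extend(encode(child))
    if tag in ATTR_SYMBOL:
        # Shapes are closed with the lowercase form of their opening letter.
        tokens.append(TAG_OPEN[tag].lower())
    return tokens


def char_ids(text):
    """Character-level tokenization: map each character to an integer id."""
    vocab = {ch: i for i, ch in enumerate(sorted(set(text)))}
    return [vocab[ch] for ch in text], vocab
```

A typical use would be `text = " ".join(encode(ET.fromstring(xml_string)))` followed by `ids, vocab = char_ids(text)`. Because each attribute name collapses to a single character, the network only has to learn the digits and the order of the fields, not the XML syntax around them.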